Researchers at the University of California, Los Angeles (UCLA) have developed a new deep-learning AI framework that can quickly analyze complex medical scans, including MRIs and 3D medical images, with accuracy comparable to that of clinical specialists. The research describing the system’s capabilities is published in Nature Biomedical Engineering.

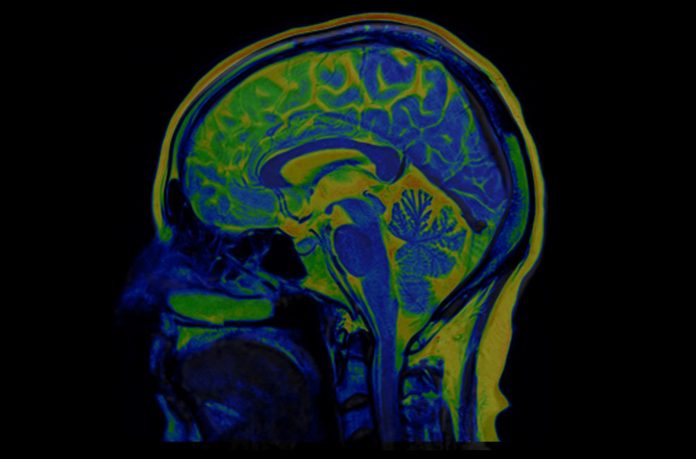

The model, called SLIViT (SLice Integration by Vision Transformer), represents a significant advance in medical imaging. Unlike existing models, which typically focus on a single type of scan or on specific diseases, SLIViT has been tested on a range of imaging data, including 3D retinal scans, ultrasound videos, MRI scans for liver disease assessment, and 3D CT scans for lung nodule screening. The researchers say this breadth could make the model applicable in settings beyond those tested in this research.

Artificial neural networks such as SLIViT learn from extensive datasets annotated by medical experts. 3D imaging is inherently complex because each scan captures depth in addition to length and width. The added dimension demands more detailed analysis: a single 3D retinal scan can comprise up to 100 separate 2D images, and specialists typically need several minutes of close examination to identify subtle disease markers in them.
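To make the "stack of 2D images" idea concrete, here is a minimal Python sketch of a volumetric scan represented as a sequence of 2D slices. It is illustrative only and is not SLIViT's actual data pipeline: the helper names (`make_volume`, `volume_shape`, `split_into_slices`), the (depth, height, width) ordering, and the tiny 4×4 slice size are all assumptions chosen for the example.

```python
# Illustrative only: a tiny synthetic "volume", not real scan data.
# The (depth, height, width) ordering is an assumption for this sketch.

def make_volume(depth, height, width):
    """Build a nested-list volume filled with zeros."""
    return [[[0 for _ in range(width)] for _ in range(height)]
            for _ in range(depth)]

def volume_shape(volume):
    """Return (depth, height, width) of a nested-list volume."""
    return (len(volume), len(volume[0]), len(volume[0][0]))

def split_into_slices(volume):
    """Return the 2D slices of the volume along the depth axis."""
    return [slice_2d for slice_2d in volume]

# A scan with 100 slices, each a (hypothetically tiny) 4x4 image.
volume = make_volume(depth=100, height=4, width=4)
slices = split_into_slices(volume)

print(volume_shape(volume))  # (100, 4, 4)
print(len(slices))           # 100
```

Each element of `slices` is one 2D image, which is why reviewing a single volumetric scan by hand means examining many images rather than one.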

“Compiling and annotating large volumetric datasets necessary for standard 3D models is not feasible with conventional resources,” noted co-first author Oren Avram, PhD, a postdoctoral researcher at UCLA Computational Medicine. “While several models exist, their training is often limited to one imaging type and a specific organ or condition.”

SLIViT differs from other AI models in that it combines two AI components with a different learning approach. This combination allows it to identify disease risk across multiple volumetric modalities and to remain effective even with smaller training datasets.

“We show that SLIViT, despite being a generic model, consistently achieves significantly better performance compared to domain-specific state-of-the-art models,” said Berkin Durmus, a UCLA PhD student who participated in the research. “It has clinical applicability potential, matching the accuracy of manual expertise of clinical specialists while reducing time by a factor of 5,000. And unlike other methods, SLIViT is flexible and robust enough to work with clinical datasets that are not always in perfect order.”

Importantly, Avram said, the new system can serve as a foundation model for building future predictive models from medical images. Its automated annotation can also benefit clinicians and researchers alike by reducing the cost and duration of data acquisition.

Co-senior author SriniVas R. Sadda, MD, a professor of ophthalmology at UCLA Health, said he was “thrilled” by SLIViT’s ability to perform under real-world conditions and with a small amount of training data. “SLIViT thrives with just hundreds, not thousands, of training samples for some tasks, giving it a substantial advantage over other standard 3D-based methods in almost every practical case related to 3D biomedical imaging annotation.”

The team is aware of the potential for biases in AI tools, which can adversely affect diagnostic accuracy and exacerbate health disparities. While the speed of SLIViT’s annotation process is impressive, the research team plans to ensure that any systematic biases are mitigated.

Moving forward, the UCLA researchers will broaden their research to include additional treatment modalities and to explore SLIViT’s potential for disease forecasting. By addressing biases and enhancing the framework’s capabilities, they hope to facilitate earlier diagnosis and improve treatment regimens.