An AI tool for detecting brain cancer on imaging scans increases in accuracy when trained using pictures of camouflaged animals in the wild, an intriguing study shows.

The findings demonstrate how a machine learning model trained on one task can be repurposed for another, potentially unrelated, task using a different dataset in a process known as “transfer learning.”

The research, published in the journal Biology Methods and Protocols, is the first to apply camouflage animal transfer learning to deep neural network training for the detection and classification of tumors.

The method developed by the researchers also sheds light on how the AI algorithm makes its decisions, which could improve trust among both medical professionals and patients.

Deep learning models can lack transparency, but the networks in the current study were able to generate images showing the specific areas covered in their tumor-positive or tumor-negative classifications.

Lead researcher Arash Yazdanbakhsh, PhD, from Boston University, explained that advances in AI allow more accurate detection and recognition of patterns.

“This consequently allows for better imaging-based diagnosis aid and screening, but also necessitate more explanation for how AI accomplishes the task,” he continued.

“Aiming for AI explainability enhances communication between humans and AI in general. This is particularly important between medical professionals and AI designed for medical purposes.

“Clear and explainable models are better positioned to assist diagnosis, track disease progression, and monitor treatment.”

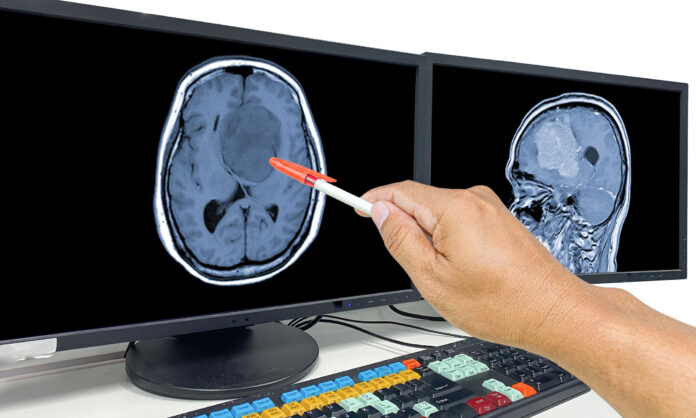

AI has shown promise in radiology, where delays in treatment can arise while waiting for technicians to process medical images.

Convolutional neural networks (CNNs) are powerful tools that learn from large image datasets to distinguish and categorize images.

While detecting animals hidden through camouflage involves very different images from finding brain tumors, parallels can be drawn between that task and distinguishing cancerous cells that blend into healthy tissue.

Yazdanbakhsh and co-workers examined how this might be of benefit using magnetic resonance images (MRIs) of cancerous and healthy brains held in online public repositories.

Initially, two neural networks, T1Net and T2Net, were trained for detection and classification of post-contrast T1 and T2 MRIs, respectively.

The aim was to distinguish healthy from cancerous MRIs, as well as to identify the area affected by cancer and the type of cancer it resembled.

The researchers found that the networks were almost perfect on normal brain images, with only one or two false negatives between both networks, demonstrating a strong ability to differentiate between cancerous and normal brains.

However, the networks struggled more with glioma subtype classification and showed modest performance, with T1Net having an average accuracy of 85.99% and T2Net trailing slightly behind at 83.85%.

The models were then trained using a camouflage animal detection dataset, consisting of almost 3000 images of clear and camouflaged animals divided into 15 categories.
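The general idea of transfer learning can be illustrated with a deliberately tiny sketch. It is not the researchers' architecture or data: the two-dimensional toy tasks, the one-hidden-layer network, and every name in it (`forward`, `train`, `make_data`, and so on) are assumptions made purely for illustration. A network is first trained on a source task, then its hidden layer is reused and frozen while a fresh output layer is trained on a smaller target dataset.

```python
import math
import random

def forward(x, hidden_w, head_w):
    """Return (hidden activations, predicted probability) for one input."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in hidden_w]
    z = sum(w * hi for w, hi in zip(head_w, h))
    return h, 1.0 / (1.0 + math.exp(-z))

def train(data, hidden_w, head_w, freeze_hidden, epochs=100, lr=0.1):
    """Plain SGD on log loss; the hidden layer is left untouched when frozen."""
    for _ in range(epochs):
        for x, y in data:
            h, p = forward(x, hidden_w, head_w)
            err = p - y  # gradient of the log loss w.r.t. the output pre-activation
            if not freeze_hidden:
                for j, row in enumerate(hidden_w):
                    grad_h = err * head_w[j] * (1.0 - h[j] ** 2)
                    for i in range(len(row)):
                        row[i] -= lr * grad_h * x[i]
            for j in range(len(head_w)):
                head_w[j] -= lr * err * h[j]

def make_data(rng, n, label_fn):
    """Random 2-D points with a constant 1.0 appended as a bias input."""
    points = [(rng.uniform(-1, 1), rng.uniform(-1, 1), 1.0) for _ in range(n)]
    return [(x, label_fn(x)) for x in points]

def accuracy(data, hidden_w, head_w):
    hits = sum((forward(x, hidden_w, head_w)[1] > 0.5) == (y == 1.0) for x, y in data)
    return hits / len(data)

rng = random.Random(0)
n_hidden, n_inputs = 4, 3

# Step 1: train the whole network on a (hypothetical) source task.
source = make_data(rng, 200, lambda x: 1.0 if x[0] + x[1] > 0 else 0.0)
hidden_w = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
source_head = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
train(source, hidden_w, source_head, freeze_hidden=False)

# Step 2: keep the learned hidden layer, attach a new output layer,
# and train only that layer on a smaller (hypothetical) target dataset.
target = make_data(rng, 40, lambda x: 1.0 if x[0] + 0.5 * x[1] > 0 else 0.0)
frozen_copy = [row[:] for row in hidden_w]
target_head = [0.0] * n_hidden
train(target, hidden_w, target_head, freeze_hidden=True)

print("target accuracy:", accuracy(target, hidden_w, target_head))
```

In practice, deep learning frameworks handle the freezing and reuse of layers; the sketch only shows the order of operations.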

Both transfer-trained networks achieved a higher mean accuracy than their non-transfer-trained counterparts, although the gain was statistically significant only for the T2 network, where mean accuracy rose from 83.85% (T2Net) to 92.20% (ExpT2Net).
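To put the T2 figures in perspective, the short calculation below works out the gain in three common ways. It uses only the two accuracies quoted in this article; the variable names are illustrative, and the error-rate framing is an added way of reading the numbers, not a statistic reported by the researchers.

```python
baseline_acc = 83.85  # T2Net mean accuracy (%), as quoted in the article
transfer_acc = 92.20  # ExpT2Net mean accuracy (%), as quoted in the article

# Absolute gain, in percentage points.
absolute_gain = transfer_acc - baseline_acc

# Gain relative to the baseline accuracy.
relative_gain = 100.0 * absolute_gain / baseline_acc

# The same change expressed as a reduction in the error rate.
baseline_err = 100.0 - baseline_acc
transfer_err = 100.0 - transfer_acc
error_reduction = 100.0 * (baseline_err - transfer_err) / baseline_err

print(f"absolute gain:   {absolute_gain:.2f} percentage points")
print(f"relative gain:   {relative_gain:.2f}%")
print(f"error reduction: {error_reduction:.1f}%")
```

On these numbers the gain is about 8.35 percentage points, which corresponds to roughly halving the error rate.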

Applying camouflage animal transfer learning to deep neural network training was particularly promising for T2-weighted MRI data, which showed the greatest improvement in testing accuracy.

While the best performing proposed model was about 6% less accurate than the best standard human detection, the researchers maintained that the study successfully demonstrates the quantitative improvement brought on by this training paradigm.

Qualitative metrics such as feature space and DeepDreamImage analysis, as well as image saliency maps, gave insight into the internal states of the trained models and effectively communicated which image regions were most important from the network’s perspective while learning.
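One simple way to see what a saliency map conveys is occlusion sensitivity: hide one patch of the image at a time and record how much the model's score drops. The sketch below is an illustration only. The article does not say which saliency method the researchers used, and the classifier here (`toy_score`, which just responds to overall brightness) and the 6x6 image are hypothetical stand-ins.

```python
import math

def toy_score(image):
    """Hypothetical stand-in classifier: logistic score of total brightness."""
    z = 0.5 * sum(sum(row) for row in image) - 3.0
    return 1.0 / (1.0 + math.exp(-z))

def occlusion_saliency(image, score_fn, patch=2, fill=0.0):
    """Map each patch to the score drop seen when that patch is blanked out."""
    rows, cols = len(image), len(image[0])
    base = score_fn(image)
    saliency = [[0.0] * cols for _ in range(rows)]
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            occluded = [row[:] for row in image]
            for i in range(r, min(r + patch, rows)):
                for j in range(c, min(c + patch, cols)):
                    occluded[i][j] = fill
            drop = base - score_fn(occluded)
            for i in range(r, min(r + patch, rows)):
                for j in range(c, min(c + patch, cols)):
                    saliency[i][j] = drop
    return saliency

# Toy 6x6 "scan": dim background with one bright 2x2 region.
size = 6
image = [[0.1] * size for _ in range(size)]
for i in (2, 3):
    for j in (2, 3):
        image[i][j] = 1.0

saliency = occlusion_saliency(image, toy_score)
for row in saliency:
    print(" ".join(f"{value:.2f}" for value in row))
```

The largest values in the printed grid fall on the bright region, which is the kind of "most important image region" a saliency map is meant to highlight.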

“These XAI [explainable AI] methods allow us to insightfully visualize what is happening as the models train on brain cancer imaging data and what characteristics it associates with different tumor classes, providing a dimension of analysis beyond accuracy metrics,” the researchers said.