A new artificial intelligence (AI) tool developed by researchers at the University of Michigan (U-M) can help surgeons determine, within 10 seconds, whether any portion of a cancerous brain tumor that should be removed remains. A study of the tool, called FastGlioma, was published in Nature and showed that it outperformed conventional methods of detecting residual tumor tissue.

Gliomas are a type of brain tumor that is difficult to remove completely. Their infiltrative nature makes it hard for surgeons to distinguish between healthy brain tissue and residual tumor. Removing all tumor tissue is critical for improving patient outcomes, as residual tumor left behind can lead to tumor recurrence, a lower quality of life, and a higher risk of death.

“FastGlioma is an artificial intelligence-based diagnostic system that has the potential to change the field of neurosurgery by immediately improving comprehensive management of patients with diffuse gliomas,” said senior study author Todd Hollon, MD, a neurosurgeon at U-M Health. “The technology works faster and more accurately than current standard of care methods for tumor detection and could be generalized to other pediatric and adult brain tumor diagnoses. It could serve as a foundational model for guiding brain tumor surgery.”

During brain tumor surgeries, neurosurgeons commonly use MRI or fluorescent imaging techniques to locate any remaining tumor tissue. But MRI requires additional intraoperative equipment, and fluorescent imaging is not applicable to all tumor types; both constraints limit the widespread use of these techniques.

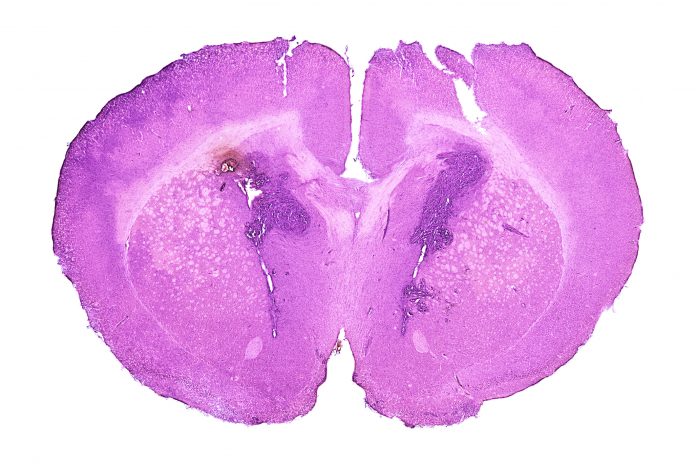

But FastGlioma could change the way surgeons approach brain tumor resections. It works by combining high-resolution optical imaging, specifically stimulated Raman histology, with foundation AI models, which have been trained to classify images with high accuracy. Stimulated Raman histology, developed at U-M, provides quick, detailed images of tumor tissue, and the AI model analyzes these images to identify cancerous cells in real time. The AI model was trained using a dataset of more than 11,000 surgical specimens and four million unique microscopic views.

In an international study of FastGlioma, neurosurgery teams analyzed new, unprocessed tissue samples from 220 patients who had surgery for low- or high-grade diffuse glioma. The tool calculated how much tumor remained with an accuracy rate of 92%, while missing residual tumor tissue only 3.8% of the time, a vast improvement over the miss rates of around 25% for current technologies.

“This model is an innovative departure from existing surgical techniques by rapidly identifying tumor infiltration at microscopic resolution using AI,” said Shawn Hervey-Jumper, MD, co-senior author and a professor of neurosurgery at the University of California San Francisco. “The development of FastGlioma can minimize the reliance on radiographic imaging, contrast enhancement, or fluorescent labels to achieve maximal tumor removal.”

The AI tool operates in two modes: full-resolution mode, which takes about 100 seconds to acquire an image, and fast mode, which takes just 10 seconds. Fast mode, while slightly less accurate at approximately 90%, is still highly effective in providing real-time feedback to surgeons, informing them if further tumor resection is necessary.

“This means that we can detect tumor infiltration in seconds with extremely high accuracy, which could inform surgeons if more resection is needed during an operation,” Hollon said.

The potential of FastGlioma extends beyond gliomas, its developers believe.

“These results demonstrate the advantage of visual foundation models such as FastGlioma for medical AI applications,” said Aditya Pandey, MD, co-author and chair of the department of neurosurgery at U-M Health. “In future studies, we will focus on applying the FastGlioma workflow to other cancers, including lung, prostate, breast, and head and neck cancers.”