Corey Chivers, a senior AI scientist at the digital pathology company Proscia, dreams of a future where doctors have easy access to AI-based apps that help them analyze and interpret data and, in turn, diagnose and treat every patient correctly.

Getting there requires several key elements, including bespoke models for tasks like identifying AI-based biomarkers. But building those models takes enormous amounts of data and significant human and computational resources, including massive compute power organized into a proven architecture that can support clinical-grade analysis.

According to Chivers, it would be very helpful not to have to start from scratch for every iteration of each application, in fields such as digital pathology, where the work ultimately boils down to analyzing and interpreting an image.

Chivers and Proscia have taken a step toward that vision with the release of Proscia AI, a digital pathology platform for building AI algorithms that support applications like biomarker discovery, clinical trial optimization, and the advancement of companion diagnostics. Proscia AI consists of two key parts, Concentriq Embeddings and the Proscia AI Toolkit, both integrated into Proscia’s digital pathology platform, Concentriq.

“A large portion of doing these research projects and developing AI solutions is in all of that upstream stuff of coordinating data, compute, and infrastructure pipelines,” said Chivers. “We take care of all of that.”

Digital pathology history 101

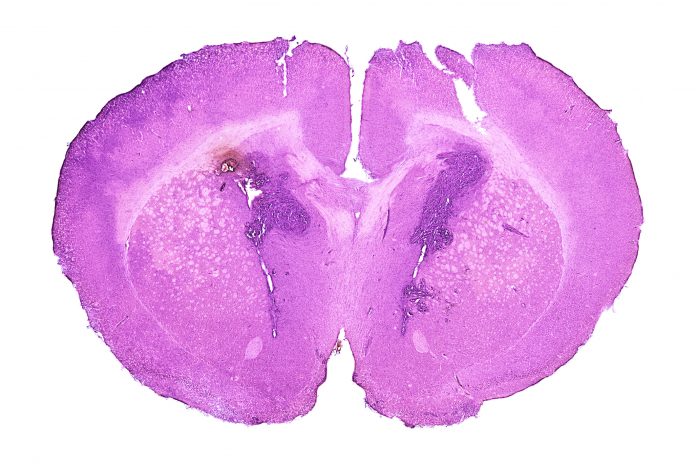

To understand what Proscia AI does, it helps to understand how digital pathology solutions have been developed. In a nutshell, the process involves capturing gigapixel images of slides and training a model to link them to diagnoses or biomarkers.

Until recently, this was a slow process that required a lot of handholding. The available computational tools, architecturally simple and naive neural networks, had to be “supervised” with labeled data to “learn” attributes such as shape and texture from a sea of pixels and thereby distinguish diseased samples from healthy ones.

According to Chivers, people began to realize that large labeled data sets are scarce, especially in the medical domain, because they are extremely expensive to collect and difficult to curate. Unlabeled data is far more plentiful, though analyzing it requires a whole new level of compute power.

The introduction of self-supervised learning, which does not require labeled data, sparked a renaissance in digital pathology and in other medical (and non-medical) domains beyond images. Self-supervised models could chug through massive amounts of unlabeled data scraped from all corners of the internet or from archives, giving rise to foundation models: machine learning or deep learning models trained on such broad data that they can be applied across a wide range of use cases.

“In pathology, we have slides that have been digitized with records that are not great or have no record of an associated pathology report, but we have the image,” explained Chivers. “If you simply take large piles of digitized slides and begin building these foundation models that really see the core concepts of biology, it can abstract the ideas of cell texture and morphology and put them into vectors of numbers called embeddings.”

Developing a foundation model is no small feat, and there seems to be an ongoing arms race to see who can use the greatest quantity and diversity of data to build the best foundation model for digital pathology. Virchow, a foundation model developed through a collaboration between Paige and Microsoft, made headlines for raising the bar: it was built on data from around 100,000 patients, amounting to about 1.5 million H&E-stained whole slide images (WSIs).

Foundation models at the fingertips

In creating their own tools, Chivers and Proscia scientists realized that building application-specific image analysis models would be far more accessible if people didn’t first have to develop their own foundation models just to generate embeddings for downstream applications. So, instead of releasing another foundation model, Proscia built an entire engine, Concentriq Embeddings, to let researchers go from images to embeddings as quickly as possible.

“The genesis of Concentriq Embeddings was to put right at the fingertips of the researcher the place where you now want to start when you are developing these models, which is not from scratch but from starting from the embedding that comes from a foundation model or possibly multiple foundation models,” said Chivers. “We thought this is something developers and other researchers could use… so that people could start with the embeddings… When you start with just embeddings, which are really just vectors of numbers, you can actually build models with much less development time and compute resources than you would have needed in the past.”
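Chivers’s point about building models from embeddings alone can be illustrated with a linear probe, a common technique in which a simple classifier is trained on fixed embedding vectors while the foundation model itself stays frozen. The sketch below is a generic illustration under stated assumptions, not Proscia’s method or API: the embeddings are synthetic random vectors standing in for foundation-model output, and the 16-dimensional size, labels, and hyperparameters are all hypothetical.

```python
import math
import random


def train_linear_probe(embeddings, labels, lr=0.1, epochs=200):
    """Fit a logistic-regression 'linear probe' on fixed embedding vectors
    using plain batch gradient descent."""
    dim = len(embeddings[0])
    weights = [0.0] * dim
    bias = 0.0
    n = len(embeddings)
    for _ in range(epochs):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for x, y in zip(embeddings, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of label 1
            err = p - y
            for j in range(dim):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the probe's logit is positive, else 0."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


# Hypothetical stand-in for foundation-model embeddings: two noisy clusters.
random.seed(0)
DIM = 16


def fake_embedding(label):
    center = 1.0 if label == 1 else -1.0
    return [center + random.gauss(0, 0.5) for _ in range(DIM)]


labels = [i % 2 for i in range(200)]
embeddings = [fake_embedding(y) for y in labels]
w, b = train_linear_probe(embeddings, labels)
accuracy = sum(predict(w, b, x) == y for x, y in zip(embeddings, labels)) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

Because only a weight vector and a bias are learned, training takes seconds on a CPU; the expensive step of producing the embeddings happens once, upstream.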

Chivers said Concentriq Embeddings was developed so that researchers can experiment with a variety of models and run comparisons and benchmarks across them for the task of interest, since there is no way to predict which model will excel at a given task. Concentriq Embeddings provides access to four openly licensed foundation models (DINOv2, PLIP, ConvNext, and CTransPath), with plans to add more as the field evolves. Though it is geared toward H&E-stained WSIs, Chivers said Concentriq Embeddings can generate embeddings from brightfield images produced by any scanner.
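Benchmarking several foundation models on the same task can be as simple as fitting the same lightweight classifier to each model’s embeddings and comparing held-out accuracy. The sketch below assumes a nearest-centroid classifier and a single random train/test split; the model names "model_a" and "model_b" and their embeddings are hypothetical synthetic stand-ins, not outputs of DINOv2, PLIP, ConvNext, or CTransPath.

```python
import random
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def centroid(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean of a list of vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid_accuracy(train: List[Tuple[Vector, int]],
                              test: List[Tuple[Vector, int]]) -> float:
    """Classify each test vector by its closest class centroid; return accuracy."""
    by_label: Dict[int, List[Vector]] = {}
    for x, y in train:
        by_label.setdefault(y, []).append(x)
    centroids = {y: centroid(xs) for y, xs in by_label.items()}
    correct = 0
    for x, y in test:
        pred = min(centroids, key=lambda lbl: sq_dist(x, centroids[lbl]))
        correct += pred == y
    return correct / len(test)


def benchmark(embeddings_by_model: Dict[str, List[Vector]], labels: List[int],
              test_fraction: float = 0.25, seed: int = 0) -> Dict[str, float]:
    """Evaluate every embedding source on the same split; best model first."""
    idx = list(range(len(labels)))
    random.Random(seed).shuffle(idx)
    cut = int(len(labels) * (1 - test_fraction))
    train_idx, test_idx = idx[:cut], idx[cut:]
    results = {}
    for name, embs in embeddings_by_model.items():
        train = [(embs[i], labels[i]) for i in train_idx]
        test = [(embs[i], labels[i]) for i in test_idx]
        results[name] = nearest_centroid_accuracy(train, test)
    return dict(sorted(results.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical data: "model_a" separates the classes, "model_b" is pure noise.
rng = random.Random(42)
labels = [i % 2 for i in range(200)]
fake = {
    "model_a": [[(1.0 if y else -1.0) + rng.gauss(0, 0.5) for _ in range(8)] for y in labels],
    "model_b": [[rng.gauss(0, 1.0) for _ in range(8)] for _ in labels],
}
results = benchmark(fake, labels)
print(results)
```

In practice one would use cross-validation and a task-appropriate metric, but the structure (same labels, same split, one evaluation per embedding source) stays the same.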

Chivers explained, “Users can get embeddings across everything consistently; even if they come from different scanners, objective powers, and so on, you can still get consistent results across the board. It removes much of the previously painful stuff from the development pipeline.”

None of this would work without massive amounts of compute power. To run these foundation models on proprietary data within workflows, Proscia built a compute infrastructure on GPU servers. According to Chivers, simply splitting gigapixel images into patches and passing them through these models is a huge orchestration challenge.
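To give a sense of the scale involved, the sketch below computes a non-overlapping patch grid for a slide and groups the coordinates into batches for a model. It is a simplified illustration, assuming 224 × 224-pixel patches (a common input size for vision backbones), a hypothetical 100,000 × 80,000-pixel slide, and no tissue detection; reading the pixel data (for example with OpenSlide) and GPU inference are omitted.

```python
from typing import Iterable, Iterator, List, Tuple

Coord = Tuple[int, int]


def patch_grid(width: int, height: int, patch: int = 224, stride: int = 224) -> Iterator[Coord]:
    """Yield top-left (x, y) coordinates of patches that fit entirely inside the slide."""
    for y in range(0, height - patch + 1, stride):
        for x in range(0, width - patch + 1, stride):
            yield (x, y)


def batched(coords: Iterable[Coord], batch_size: int) -> Iterator[List[Coord]]:
    """Group coordinates into lists of batch_size (the last batch may be smaller)."""
    batch: List[Coord] = []
    for c in coords:
        batch.append(c)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


# Hypothetical slide dimensions at full resolution.
SLIDE_W, SLIDE_H = 100_000, 80_000
n_patches = sum(1 for _ in patch_grid(SLIDE_W, SLIDE_H))
print(n_patches)  # → 159222
n_batches = sum(1 for _ in batched(patch_grid(SLIDE_W, SLIDE_H), 256))
print(n_batches)  # → 622
```

Even at a single magnification, one such slide yields on the order of 10^5 patches, which is why scheduling reads, batching, and GPU work across thousands of slides becomes an orchestration problem.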

Closing the loop

Along with Concentriq Embeddings, Proscia has released the Proscia AI Toolkit, a collection of open-source tools meant to advance AI in the life sciences and speed its widespread adoption. With these tools, teams can incorporate Concentriq Embeddings into their workflows, which Chivers hopes will free them to concentrate on AI model development rather than technical details.

Chivers is optimistic that the Concentriq community will continue to grow and that users will contribute their own tools, expanding the library with new techniques and advances. He expects this community-driven, collaborative approach to increase the use of AI on the Concentriq platform for model building, visualization, and deployment, and to improve how foundation models are put into practice.

For now, Chivers said, Proscia AI is focused on researching and developing AI-based tools and products, such as biomarkers. The ultimate goal, however, is to carry the same process used to create embeddings in a research setting over to the clinical setting. For example, if Proscia AI is used to develop a biomarker, the same method could then be used to diagnose patients.

“We want to be able to close this loop—if you build something with this tool, you can finish the life cycle by releasing it as an application, which is then very easily deployable on our platform because all of the connectors are present,” said Chivers.

Because Proscia has already developed good lab practices and standardized pipelines for the existing Concentriq platform, the models created with Proscia AI can be deployed in a way designed to pass regulatory hurdles.

Chivers said that this version of Proscia AI is only the company’s first offering and will grow with technological trends. According to Chivers, the “big thing right now” is vision-language models, which essentially align the embeddings of an image with those of text, such as a clinical report.
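One widely used way to align image and text embeddings is a CLIP-style contrastive objective: matching image-text pairs are pulled together and mismatched pairs are pushed apart in a shared embedding space. The sketch below computes that symmetric loss for a small batch; it is a generic illustration, not necessarily the approach Proscia uses, and the toy vectors and the temperature value are assumptions.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def similarity_matrix(images: List[Vector], texts: List[Vector]) -> List[List[float]]:
    """Cosine similarity between every image embedding and every text embedding."""
    imgs = [normalize(v) for v in images]
    txts = [normalize(v) for v in texts]
    return [[sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]


def cross_entropy_diag(logits: List[List[float]]) -> float:
    """Mean cross-entropy where the correct class for row k is column k."""
    total = 0.0
    for k, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[k]
    return total / len(logits)


def clip_style_loss(images: List[Vector], texts: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric contrastive loss: image-to-text and text-to-image, averaged."""
    sims = similarity_matrix(images, texts)
    logits = [[s / temperature for s in row] for row in sims]
    logits_t = [list(col) for col in zip(*logits)]  # transpose: text-to-image
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(logits_t))


# Toy embeddings: pair k is (images[k], texts[k]).
images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [matched_texts[1], matched_texts[0]]
print(clip_style_loss(images, matched_texts))  # near zero
print(clip_style_loss(images, swapped_texts))  # much larger
```

In a real model, the image and text encoders would be trained to minimize this loss, so that a slide and its matching report end up close together in the shared space.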

After that, Chivers said, “The sky is the limit. The more modalities you can combine, the more powerful these tools become, including diagnostic tools. The more modalities can be fused together, the better. We believe genomic data will be the next one after text, followed by every other mode imaginable.”